Findmypast (FMP) released the 1921 Census of England & Wales at midnight on Jan 6th 2022 to an eager community of genealogists. The preparation of the census (preservation, digitisation and transcription) took three years of hard work. Aside from the data preparation, we also had technical challenges to address. Specifically, could our services deal with the projected increase in users during the first few days of the 1921 launch period?

This post is the first in a series that details how we scaled our service to deal with a projected 12x increase in users on day one.

In the beginning…

...way back in Nov 2020, the release of the 1921 census was just over 13 months away. Within engineering, our thoughts turned to ensuring that Findmypast was fit to meet the anticipated increase in load. We knew from previous incidents that the reliability and performance of some of our services could cause issues under load. So we started a scaling working group, not only to address these specific issues but also to prove to management and our external stakeholders that the system as a whole was resilient and could perform well under load.

The expectations for the working group were to:

- Champion performance improvements within the team. That is, evangelise scaling to the team, make sure that backend improvement work was added to backlogs, scheduled into sprints, etc.

- Ensure that the key metrics for each service owned by the team were correctly instrumented, visualised and alerted upon.

- Schedule time for chaos and stress testing team services.
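As an illustration of the instrumentation expectation above, here is a minimal Python sketch of a latency recorder that times a block of code and reports percentiles such as the median and the 99th. The class name `LatencyRecorder` and its methods are hypothetical and are not taken from our services; a real service would normally export these measurements to a metrics system rather than keep them in memory.

```python
import time
from contextlib import contextmanager


class LatencyRecorder:
    """Collects request durations and reports nearest-rank percentiles.

    Hypothetical helper for illustration only.
    """

    def __init__(self) -> None:
        self.samples_ms: list[float] = []

    @contextmanager
    def measure(self):
        """Time the enclosed block and record its duration in milliseconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples_ms.append((time.perf_counter() - start) * 1000)

    def percentile(self, p: int) -> float:
        """Nearest-rank percentile for an integer p between 1 and 100."""
        if not self.samples_ms:
            raise ValueError("no samples recorded")
        if not 1 <= p <= 100:
            raise ValueError("p must be between 1 and 100")
        ordered = sorted(self.samples_ms)
        rank = (p * len(ordered) + 99) // 100  # integer ceiling of p * n / 100
        return ordered[rank - 1]
```

Wrapping a request handler in `with recorder.measure():` and then reading `recorder.percentile(50)` and `recorder.percentile(99)` gives the two figures that are usually graphed and alerted on.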

The initial meeting set the goals and expectations for the team and also discussed a few incidents that had caused site outages due to cascading failures and the like. Essentially, we highlighted the things that had gone wrong in the past and discussed the areas where changes would have limited the blast radius of the outage. We also revisited our documentation. We have almost 140 microservices running in Kubernetes (K8s), and understanding their relationships to other services was key, especially to those services that run outside of K8s, such as databases, message queues, etc.

The group met again in January 2021 and bi-weekly after that. Teams reported back to the group on their performance improvements and on their progress with stress and chaos testing.

Stress testing

Internally, we developed a tool to help developers stress test their services. Originally named Sylvester, the tool was a wrapper around Taurus from BlazeMeter. It allowed us to easily run a load test locally or from inside K8s, and the tests could also be imported directly into BlazeMeter and executed from there.

Teams were responsible for stress testing their own services directly against production. Our staging environment is not a like-for-like match of production, so stress testing on that environment would not have given us any helpful insight into how the services would perform in production.

At this point, all the testing was internal, directly against the services. There was no outside-in testing, but there were plans to introduce that later in the year.

Teams were advised to apply a low load during the first few tests. Over the following weeks, they were to discover and fix performance problems, adding extra load only once performance targets were met.
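The "start low, add load only when targets are met" advice can be sketched in a few lines of Python. This is an illustration, not how Sylvester or Taurus works: the function names `ramp_plan` and `next_load` are hypothetical, and the requests-per-second figures in the usage note are made up.

```python
def ramp_plan(start_rps: int, target_rps: int, steps: int) -> list[int]:
    """Return evenly spaced load levels from start_rps up to target_rps."""
    if steps < 2:
        raise ValueError("need at least two steps")
    if start_rps <= 0 or target_rps < start_rps:
        raise ValueError("need 0 < start_rps <= target_rps")
    increment = (target_rps - start_rps) / (steps - 1)
    return [round(start_rps + i * increment) for i in range(steps)]


def next_load(plan: list[int], current_rps: int, targets_met: bool) -> int:
    """Move to the next load level only if the last run met its targets."""
    position = plan.index(current_rps)
    if targets_met and position < len(plan) - 1:
        return plan[position + 1]
    # Targets missed (or already at full load): stay put and fix problems first.
    return current_rps
```

For example, `ramp_plan(10, 100, 4)` gives four load levels, and `next_load` keeps a team at its current level until the latest test run has met its performance targets.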

Chaos testing

We also encouraged the teams to test how their services deal with failures. For example, how well did a service cope with slow response times from upstream services or with broken network connections? We needed the services to fail gracefully and not contribute to a cascading failure.

Chaos testing was performed on our Kubernetes staging environment using the Chaos Mesh tool. At the time, the tool was not production ready but it was suitable for use on our staging environment.
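To make "fail gracefully" concrete, here is a minimal Python sketch of one common pattern: put a timeout on the upstream call and return a fallback value instead of waiting indefinitely. The function names and the simulated `slow_upstream` call are hypothetical, and this is only one of several techniques a service might use.

```python
import asyncio


async def slow_upstream(delay_seconds: float) -> str:
    """Stand-in for an upstream service call (hypothetical)."""
    await asyncio.sleep(delay_seconds)
    return "fresh"


async def call_with_fallback(
    delay_seconds: float, timeout_seconds: float, fallback: str
) -> str:
    """Return the upstream result, or the fallback if the call is too slow."""
    try:
        return await asyncio.wait_for(
            slow_upstream(delay_seconds), timeout=timeout_seconds
        )
    except asyncio.TimeoutError:
        # Degrade gracefully rather than passing the slowness on to callers.
        return fallback
```

A chaos test that injects latency into the upstream service should show the caller switching to the fallback while its own response time stays bounded by the timeout.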

Findings

Teams were actively finding and making service improvements throughout the process. During the first few months we identified some key areas that needed addressing:

- Findmypast does not run in the cloud. Instead, we run the service on hardware hosted at our data centers. Searching for records is powered behind the scenes by Apache SOLR clusters. We recognised that our existing SOLR cluster would struggle to meet the anticipated demand, so extra hardware was brought in to help spread the load. We also worked with our data center partners to spin up a SOLR cluster within AWS so that, if necessary, we could extend the on-premise SOLR cluster into AWS for extra capacity. Finally, our search team worked hard to update and re-configure the SOLR cluster, giving us the best possible performance from the existing hardware.

On launch day, at a peak of 17,400 users/min on the site, the median latency for searches was 198ms, rising to an average of 1.75 secs at the 99th percentile.

- A large project was also underway to migrate our in-house e-commerce solution to a 3rd party e-commerce partner. Ensuring that the migration was not only successful but also performant was a key deliverable for the team.

- One of our internal services, named Antracks, queried a number of other services via REST API calls. Antracks was a central service, called in most user journeys through the site. It would asynchronously call out to nine other services to grab the data it needed. Given its reliance on other services, Antracks was only as fast as the slowest of them. Even with aggressive caching strategies, circuit breakers, etc., it was clear that Antracks would not be able to achieve the performance we were looking for. In fact, Antracks increased load on the other nine services, causing them to suffer slower response times, which in turn cascaded back to the Antracks consumers. The decision was taken to replace Antracks with a new system in which Kafka events notify Antracks when key data has changed. The change of design from a pull model to a push model dramatically reduced Antracks response times as well as reducing load throughout the system.

On the 1921 launch day, at a peak of 17,400 users/min on the site, the new Antracks system reported a 99th percentile average latency of 20ms (with a max of 36ms during the period).
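The difference between the pull and push designs described for Antracks can be sketched in Python. This is a simplified illustration, not the Antracks implementation: the service names and delays are invented, the Kafka consumer is left out entirely, and events are represented as plain dictionaries.

```python
import asyncio

# Hypothetical upstream services and their response times in seconds.
SERVICE_DELAYS = {"profile": 0.01, "subscriptions": 0.02, "preferences": 0.05}


async def fetch(name: str) -> dict:
    """Simulate one REST call to an upstream service."""
    await asyncio.sleep(SERVICE_DELAYS[name])
    return {name: "data"}


async def pull_model() -> dict:
    """Fan out on every request; total latency tracks the slowest service."""
    results = await asyncio.gather(*(fetch(name) for name in SERVICE_DELAYS))
    merged: dict = {}
    for result in results:
        merged.update(result)
    return merged


class PushView:
    """Local view kept up to date by change events (for example from Kafka)."""

    def __init__(self) -> None:
        self._state: dict = {}

    def on_event(self, event: dict) -> None:
        """Apply one change event; called by the (omitted) event consumer."""
        self._state[event["service"]] = event["payload"]

    def read(self) -> dict:
        """Serve a request from local state, with no upstream calls."""
        return dict(self._state)
```

In the pull model every read costs a round trip to each upstream service, so load on those services grows with traffic. In the push model the read path touches only local state, and upstream services are involved only when their data changes.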

A success story

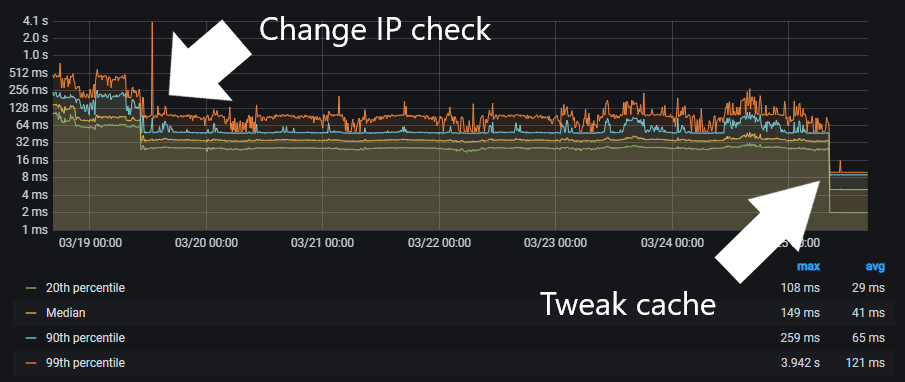

Before we finish off this post, we thought it would be interesting to show you how small changes can make big differences to service response times. This is an extreme example - not all of our services have shown this drastic improvement - but the graph below was used to illustrate to the teams at the bi-weekly meetings that if you look (instrument your code!) you’ll uncover areas that can be improved.

The service is called Sphinx and one of the functions it needs to perform is whether an IP address is within a CIDR range. The graph below shows latency of the service (under heavy load) after making two small changes. (BTW, the graph is logarithmic, not linear.)

The first big change was reducing the time taken to check if the IP address is within a specific range. The 99th percentile dropped from a maximum of 512ms (!) to ~128ms. The median response dropped from 128ms to 32ms. The problem was a 3rd party module that was used to perform the IP check and, under load, it really struggled to perform. We replaced that call with our own solution and that made a big difference.
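For readers unfamiliar with the check itself, here is a minimal Python sketch of testing whether an IP address falls within a set of CIDR ranges, using the standard library `ipaddress` module. It is not the replacement we wrote for Sphinx; the ranges and the name `ip_in_ranges` are made up for illustration. The one design point it does show is parsing the ranges once up front, so the per-request work is a cheap comparison.

```python
import ipaddress

# Hypothetical ranges, parsed once at start-up rather than on every request.
ALLOWED_RANGES = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "192.168.1.0/24", "2001:db8::/32")
]


def ip_in_ranges(ip: str, ranges=ALLOWED_RANGES) -> bool:
    """Return True if the address falls inside any of the given networks."""
    address = ipaddress.ip_address(ip)
    # Membership is False when the address and network IP versions differ.
    return any(address in network for network in ranges)
```

Calling `ip_in_ranges("10.1.2.3")` returns `True` for the ranges above, while `ip_in_ranges("8.8.8.8")` returns `False`.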

The second difference was due to the way the cache was implemented. A few small tweaks were all it took to bring the 99th percentile down to ~16ms, a big drop from 512ms a few days before!
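We have not described the cache tweaks themselves, so the following Python sketch is only a generic illustration of the idea that a cache in front of a slow lookup takes work off the hot path. The `TTLCache` class, its time-to-live policy and the injectable clock are all assumptions made for this example and do not reflect how the Sphinx cache works.

```python
import time
from typing import Callable


class TTLCache:
    """Caches loader results per key for a fixed time-to-live (hypothetical)."""

    def __init__(
        self,
        loader: Callable[[str], object],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: dict[str, tuple[float, object]] = {}

    def get(self, key: str) -> object:
        """Return a cached value, reloading it only after it has expired."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the slow loader
        value = self._loader(key)
        self._entries[key] = (now + self._ttl, value)
        return value
```

Passing the clock in as a parameter keeps the expiry logic easy to test without sleeping.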

Conclusion

The FMP engineering department was well aware of the scaling challenges ahead and started work 12 months before the launch of 1921 to give us ample time to prepare. Initially, teams tested their services in isolation. By the end of March, most teams had a suite of tests available and were running them. The next step was to ramp up the testing. The next blog post describes how we kicked off stress testing “game” days, the issues we discovered and how we fixed them.

Get in touch

We are always looking for engineers to join our team, so if you’re interested, contact us or check out our current vacancies.